I don’t normally add two entries to my blog on the same day, but something caught my interest. Somebody asked the question: “What is the point of Unit Testing?”

Actually, they asked two questions:

- Why do we perform Unit Testing, even though we are going to do System testing?

- What are the benefits of Unit Testing?

To which my immediate responses were:

- Would you deliberately make something from parts you knew were broken? Or

- Would you make something from parts which you suspected were broken?

And then I thought “that might sound a little rude” and reconsidered…

Modern development methods have lots of benefits, but with flexible and rapid methods something sometimes gets lost. People forget why things are done. Or, if they’ve never been told, they wonder whether those things are worth bothering with.

Now, you should always question everything, but sometimes things are there for a good reason. If you plan to take something away:

- Understand why it was there in the first place.

- If it is no longer needed, explain why it is no longer needed.

- Understand (and be prepared to live with) the consequences of taking it away.

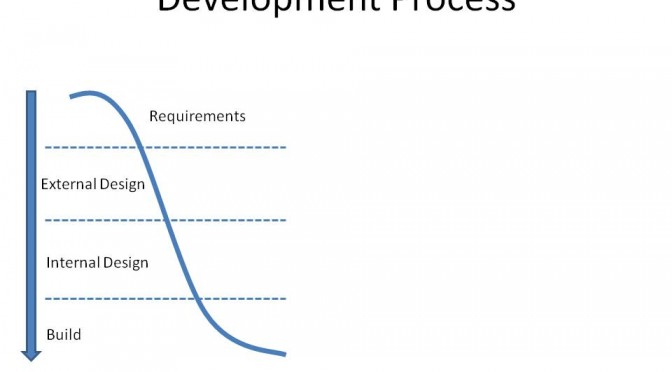

An old-fashioned view of a System Development Process

(By the way, you may notice that some of this material has been recycled from elsewhere.)

If we take a rather old-fashioned view of systems development using a “waterfall” model, then we will have a number of phases (this one is an old IBM development process, but that doesn’t really matter).

- Each phase produces something, and the stage below it expands it (produces more “things”) and adds detail.

- The “Requirements” specify what things the system needs to do. They also identify the things that need to be visible on the surface of the system.

- For each of the things that need to be visible on the surface, we need an “External Design”

- The “External Design” specifies the appearance and functional behaviour of the system.

- For everything we have in the “External Design” we need a design for the “Internals”

- And finally someone needs to “Build” what we have specified.

You can view this as a waterfall, down the side of a valley. The process is one of decomposition.

I don’t especially recommend “Waterfall” as a way of running a development project, but it is a simple model which is useful as an illustration.

The Testing Process

On the other side of the valley we build things up.

- Units are tested.

- When they work they are aggregated into “Modules” or “Assemblies” or “Subsystems”, which are tested.

- These assemblies are assembled into the System which is tested as a whole.

- Finally the System is tested by representatives of the Users.

The process is one of developing “bits”, testing the bits and then assembling the bits and then testing the assembly.

The assembly process (in the sense of “putting things together”, not compiling a file written in “assembler”) costs time and effort. Parts are tested as soon as practical after they are created and are not used until they conform to their specification. The benefit is that we are always working with things that we think work properly.
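To make the bottom-up idea a little more concrete, here is a minimal sketch using Python’s standard `unittest` module. The functions `parse_price`, `apply_discount` and `checkout_total` are hypothetical, invented purely for illustration: the first two play the part of “units”, each tested against its own small specification, and `checkout_total` is the “assembly” built from them and tested as a whole.

```python
import unittest
from decimal import Decimal


# Hypothetical unit 1: turn a price string such as "12.50" into whole pence.
def parse_price(text: str) -> int:
    return int(Decimal(text.strip()) * 100)


# Hypothetical unit 2: take a whole-number percentage off a price in pence.
# The discount itself is rounded down to whole pence.
def apply_discount(pence: int, percent: int) -> int:
    return pence - (pence * percent) // 100


# Hypothetical assembly: built only from units that already pass their own tests.
def checkout_total(prices: list[str], percent: int) -> int:
    return apply_discount(sum(parse_price(p) for p in prices), percent)


class ParsePriceTest(unittest.TestCase):
    def test_pounds_and_pence(self):
        self.assertEqual(parse_price("12.50"), 1250)

    def test_whole_pounds(self):
        self.assertEqual(parse_price("3"), 300)


class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent(self):
        self.assertEqual(apply_discount(1000, 10), 900)

    def test_no_discount(self):
        self.assertEqual(apply_discount(1000, 0), 1000)


class CheckoutAssemblyTest(unittest.TestCase):
    # Tests the assembled parts together, against the assembly's own specification.
    def test_discounted_basket(self):
        self.assertEqual(checkout_total(["12.50", "7.50"], 10), 1800)
```

Passing the file to `python -m unittest` runs all three test classes. Note that `unittest` does not enforce the “units before assembly” order itself; that discipline comes from the development process, for example only wiring `checkout_total` together once the unit tests for its parts pass.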

In a well-organised world, you would like to think that the Users are testing against the original requirements!

Development and Testing should be mutually supportive

What should happen is that at every level, each component or assembly should have some sort of specification (it may be a very rudimentary specification, but it should still exist) and it should be tested against that.

In fact, there is a thoroughly respectable development approach called “Test-Driven Development”. The idea here is that the (Business) Analyst writes a “Test” which can be used to demonstrate that the system, at whatever level, is doing what it is supposed to be doing. Of course, the Analyst may need help to write an automated test, but the content should come from the Analyst.

This approach is really useful all the way through the development process. It’s a really good idea for a developer to write the tests for a piece of code before writing the code itself! In fact, I have known places where they insisted that a test was written for a bug before the developer attempted to fix it. That way, demonstrating the fix was easy: run the test without the fix and it demonstrates the bug; apply the fix, run the test again, and it passes.
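The “write a test for the bug first” practice might look something like this with Python’s `unittest`. It is a sketch under stated assumptions: `average` is a hypothetical function whose bug report says it crashes on an empty list, `average_before_fix` stands in for the original code, and the rule “an empty list averages to 0.0” is an invented specification rather than anything from a real project.

```python
import unittest


def average_before_fix(values):
    # Hypothetical original code: divides by len(values), so [] raises ZeroDivisionError.
    return sum(values) / len(values)


def average(values):
    # Hypothetical fix: an empty list averages to 0.0 (an assumed requirement).
    if not values:
        return 0.0
    return sum(values) / len(values)


class AverageRegressionTest(unittest.TestCase):
    """Written from the bug report, before anyone touched average()."""

    # Point this at average_before_fix to watch the test demonstrate the bug.
    function_under_test = staticmethod(average)

    def test_empty_list_returns_zero(self):
        self.assertEqual(self.function_under_test([]), 0.0)

    def test_existing_behaviour_unchanged(self):
        self.assertEqual(self.function_under_test([2, 4, 6]), 4.0)
```

With `function_under_test` set to `average_before_fix`, the first test errors with `ZeroDivisionError`, which demonstrates the bug; pointed at the fixed `average`, both tests pass. The second test is there so that the fix cannot quietly break behaviour that already worked.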

The Cost of Not Doing Unit (or other low-level) Testing

Without low-level testing, all bugs are found at the topmost level. That means they are found only after the product has been assembled or “built”, and then we have to work out where each error actually originated.

The Benefits of Unit Testing

- Bugs are found sooner, and they are found closer to the point at which they are created.

- Unit testing lends itself to automated testing, which can be integrated with the build process. Ask a professional Java developer about “JUnit” or a Python developer about “unittest” (one word, all lowercase).

- Automated testing increases the chances of trapping “regression bugs” as code is enhanced and bugs are fixed.

All of this means that well-planned and well-executed Unit Testing results in:

- Reduced overall cost

- Improved product quality
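As a sketch of the automation and regression benefits listed above, the snippet below shows one way a build step might run unit tests and turn the result into an exit code. `slugify`, `SlugifyTest` and `build_test_step` are hypothetical names used only for illustration. In practice a tool usually does this for you: for Python, `python -m unittest discover` already exits with a non-zero status when a test fails.

```python
import unittest


def slugify(title: str) -> str:
    # Hypothetical unit under test: "Hello World" -> "hello-world".
    return "-".join(title.lower().split())


class SlugifyTest(unittest.TestCase):
    def test_spaces_become_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_extra_whitespace_is_collapsed(self):
        self.assertEqual(slugify("  Unit   Testing "), "unit-testing")


def build_test_step(*test_cases) -> int:
    """Run the given TestCase classes and return a process exit code.

    0 means every test passed; 1 means at least one test failed or errored,
    which a build script can use to stop the build.
    """
    loader = unittest.defaultTestLoader
    suite = unittest.TestSuite(loader.loadTestsFromTestCase(tc) for tc in test_cases)
    result = unittest.TextTestRunner(verbosity=1).run(suite)
    return 0 if result.wasSuccessful() else 1
```

A build script could finish with `sys.exit(build_test_step(SlugifyTest))`. If a later “enhancement” to `slugify` stopped collapsing repeated spaces, the second test would fail, the step would return 1, and the regression would be caught before the build went any further.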